H3DViRViC Journals Results

IEEE Computer Graphics and Applications, Vol. 45, pp 90--99, 2025.

DOI: http://dx.doi.org/10.1109/MCG.2024.3517293

AI is the workhorse of modern data analytics and omnipresent across many sectors. Large Language Models and multi-modal foundation models are today capable of generating code, charts, visualizations, etc. How will these massive developments of AI in data analytics shape future data visualizations and visual analytics workflows? What is the potential of AI to reshape methodology and design of future visual analytics applications? What will be our role as visualization researchers in the future? What are opportunities, open challenges and threats in the context of an increasingly powerful AI? This Visualization Viewpoint discusses these questions in the special context of biomedical data analytics as an example of a domain in which critical decisions are taken based on complex and sensitive data, with high requirements on transparency, efficiency, and reliability. We map recent trends and developments in AI on the elements of interactive visualization and visual analytics workflows and highlight the potential of AI to transform biomedical visualization as a research field. Given that agency and responsibility have to remain with human experts, we argue that it is helpful to keep the focus on human-centered workflows, and to use visual analytics as a tool for integrating “AI-in-the-loop”. This is in contrast to the more traditional term “human-in-the-loop”, which focuses on incorporating human expertise into AI-based systems.

Computer Graphics Forum, Vol. 44, Num. 5, pp e70201, 2025.

DOI: http://dx.doi.org/10.1111/cgf.70201

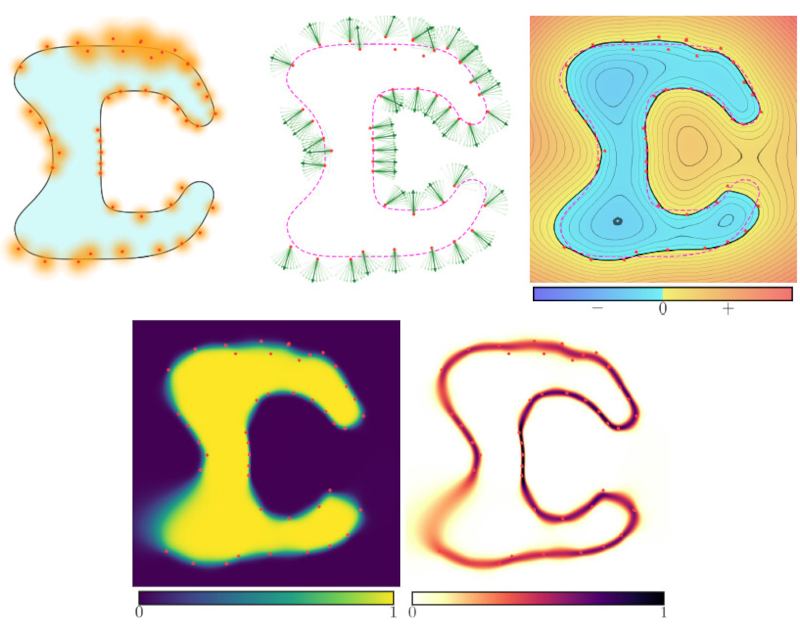

Reconstructing three-dimensional shapes from point clouds remains a central challenge in geometry processing, particularly due to the inherent uncertainties in real-world data acquisition. In this work, we introduce a novel Bayesian framework that explicitly models and propagates uncertainty from both input points and their estimated normals. Our method incorporates the uncertainty of normals derived via Principal Component Analysis (PCA) from noisy input points. Building upon the Smooth Signed Distance (SSD) reconstruction algorithm, we integrate a smoothness prior based on the curvatures of the resulting implicit function following Gaussian behavior. Our method reconstructs a shape represented as a distribution, from which sampling and statistical queries regarding the shape's properties are possible. Additionally, because of the high cost of computing the variance of the resulting distribution, we develop efficient techniques for variance computation. Our approach thus combines two common steps of the geometry processing pipeline, normal estimation and surface reconstruction, while computing the uncertainty of the output of each of these steps.

Heliyon, Vol. 10, Num. 22, pp e39692, 2024.

DOI: http://dx.doi.org/10.1016/j.heliyon.2024.e39692

Virtual Reality (VR) has proven to be a valuable tool for medical and nursing education. VR simulators are available at any time and from anywhere, and can be used with or without faculty supervision, which results in a significant optimization of time, space, and resources. In this paper we present a highly-configurable session designer for VR-based nursing education following the Standards for QUality Improvement Reporting Excellence: SQUIRE 2.0 and SQUIRE-EDU. Unlike existing platforms, we focus on letting educators quickly customize the training sessions in multiple aspects. This is achieved through the use of a visual editor, where educators can choose the environment and the elements in the session, and a node-based visual system for programming the behavior of the sessions. Thanks to end-user customization, educators can develop different variants of a session, adapt the sessions to new nursing protocols, incorporate new equipment or instruments, or accommodate custom environments. We have implemented the designer and tested it on various nursing training scenarios. The designer can be integrated into VR simulators to help educators save time in delivering highly customizable training.

Scientific Reports, Vol. 14, Num. 2694, pp 1--17, 2024.

DOI: http://dx.doi.org/10.1038/s41598-024-52903-w

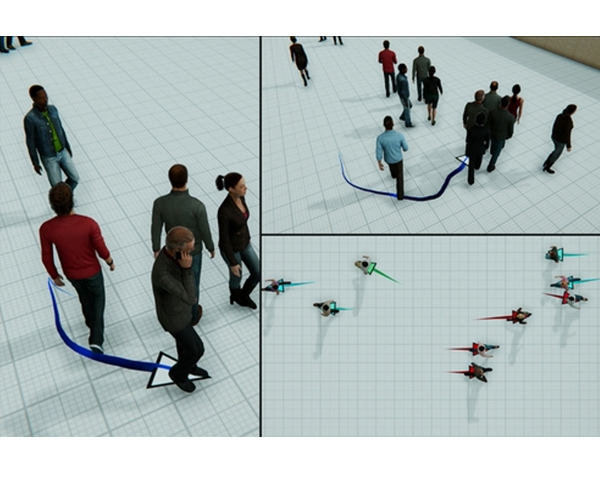

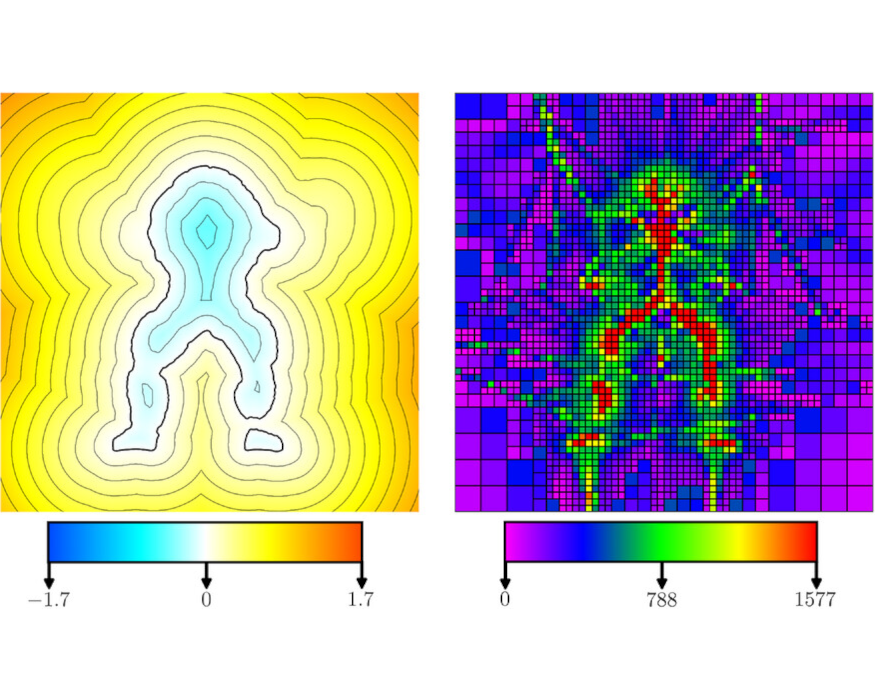

Current statistical models to simulate pandemics miss the most relevant information about the close atomic interactions between individuals which is the key aspect of virus spread. Thus, they lack a proper visualization of such interactions and their impact on virus spread. In the field of computer graphics, and more specifically in computer animation, there have been many crowd simulation models to populate virtual environments. However, the focus has typically been to simulate reasonable paths between random or semi-random locations in a map, without any possibility of analyzing specific individual behavior. We propose a crowd simulation framework to accurately simulate the interactions in a city environment at the individual level, with the purpose of recording and analyzing the spread of human diseases. By simulating the whereabouts of agents throughout the day by mimicking the actual activities of a population in their daily routines, we can accurately predict the location and duration of interactions between individuals, thus having a model that can reproduce the spread of the virus due to human-to-human contact. Our results show the potential of our framework to closely simulate the virus spread based on real agent-to-agent contacts. We believe that this could become a powerful tool for policymakers to make informed decisions in future pandemics and to better communicate the impact of such decisions to the general public.

Heliyon, Cell Press, Vol. 10, Num. 18, 2024.

DOI: http://dx.doi.org/10.1016/j.heliyon.2024.e37608

During the last few years, Bike Sharing Systems (BSS) have become a popular means of transportation in several cities across the world, owing to their low costs and associated advantages. Citizens have adopted these systems as they help improve their health and contribute to creating more sustainable cities. However, customer satisfaction and the willingness to use the systems are directly affected by the ease of access to the docking stations and finding available bikes or slots.

Therefore, the major responsibilities of system operators and managers focus on urban and transport planning by improving the rebalancing operations of their BSS. Many approaches can be considered to overcome the unbalanced station problem, but predicting the number of arrivals and departures at the docking stations has been proven to be one of the most efficient. In this paper, we study the features that influence the prediction of bike arrivals and departures in the Barcelona BSS, using a Random Forest model and one year of data. We considered features related to the weather, the station characteristics, and the facilities available within a 200-meter diameter of each station, called spatial features. The results indicate that features related to specific months, as well as temperature, pressure, altitude, and holidays, have a strong influence on the model, while spatial features have a small impact on the prediction results.

This study introduces a novel framework for choreographing multi-degree of freedom (MDoF) behaviors in large-scale crowd simulations. The framework integrates multi-objective optimization with spatio-temporal ordering to effectively generate and control diverse MDoF crowd behavior states. We propose a set of evaluation criteria for assessing the aesthetic quality of crowd states and employ multi-objective optimization to produce crowd states that meet these criteria. Additionally, we introduce time offset functions and interpolation progress functions to perform complex and diversified behavior state interpolations. Furthermore, we designed a user-centric interaction module that allows for intuitive and flexible adjustments of crowd behavior states through sketching, spline curves, and other interactive means. Qualitative tests and quantitative experiments on the evaluation criteria demonstrate the effectiveness of this method in generating and controlling MDoF behaviors in crowds. Finally, case studies, including real-world applications in the Opening Ceremony of the 2022 Beijing Winter Olympics, validate the practicality and adaptability of this approach.

Padel scientific journal, Vol. 2, Num. 1, pp 89--106, 2024.

DOI: http://dx.doi.org/10.17398/2952-2218.2.89

Recent advances in computer vision and deep learning techniques have opened new possibilities regarding the automatic labeling of sport videos. However, an essential requirement for supervised techniques is the availability of accurately labeled training datasets. In this paper we present PadelVic, an annotated dataset of an amateur padel match which consists of multi-view video streams, estimated positional data for all four players within the court (and for one of the players, accurate motion capture data of his body pose), as well as synthetic videos specifically designed to serve as training sets for neural networks estimating positional data from videos. For the recorded data, player positions were estimated by applying a state-of-the-art pose estimation technique to one of the videos, which yields a relatively small positional error (M=16 cm, SD=13 cm). For one of the players, we used a motion capture system providing the orientation of the body parts with an accuracy of 1.5° RMS. The highest accuracy though comes from our synthetic dataset, which provides ground-truth positional and pose data of virtual players animated with the motion capture data. As an example application of the synthetic dataset, we present a system for a more accurate prediction of the center-of-mass of the players projected onto the court plane, from a single-view video of the match. We also discuss how to exploit per-frame positional data of the players for tasks such as synergy analysis, collective tactical analysis, and player profile generation.

IEEE Computer Graphics and Applications, Vol. 44, Num. 4, pp 79--88, 2024.

DOI: http://dx.doi.org/10.1109/MCG.2024.3406139

Recent developments in extended reality (XR) are already demonstrating the benefits of this technology in the educational sector. Unfortunately, educators may not be familiar with XR technology and may find it difficult to adopt this technology in their classrooms. This article presents the overall architecture and objectives of an EU-funded project dedicated to XR for education, called Extended Reality for Education (XR4ED). The goal of the project is to provide a platform, where educators will be able to build XR teaching experiences without the need to have programming or 3-D modeling expertise. The platform will provide the users with a marketplace to obtain, for example, 3-D models, avatars, and scenarios; graphical user interfaces to author new teaching environments; and communication channels to allow for collaborative virtual reality (VR). This article describes the platform and focuses on a key aspect of collaborative and social XR, which is the use of avatars. We show initial results on a) a marketplace which is used for populating educational content into XR environments, b) an intelligent augmented reality assistant that communicates between nonplayer characters and learners, and c) self-avatars providing nonverbal communication in collaborative VR.

Computers & Graphics (special issue VCBM), Vol. 124, 2024.

DOI: http://dx.doi.org/10.1016/j.cag.2024.104059

Understanding the packing of long DNA strands into chromatin is one of the ultimate challenges in genomic research. An intrinsic part of this complex problem is studying the chromatin’s spatial structure. Biologists reconstruct 3D models of chromatin from experimental data, yet the exploration and analysis of such 3D structures is limited in existing genomic data visualization tools. To improve this situation, we investigated the current options of immersive methods and designed a prototypical VR visualization tool for 3D chromatin models that leverages virtual reality to deal with the spatial data. We showcase the tool in three primary use cases. First, we provide an overall 3D shape overview of the chromatin to facilitate the identification of regions of interest and the selection for further investigation. Second, we include the option to export the selected regions and elements in the BED format, which can be loaded into common analytical tools. Third, we integrate epigenetic modification data along the sequence that influence gene expression, either as in-world 2D charts or overlaid on the 3D structure itself. We developed our application in collaboration with two domain experts and gathered insights from two informal studies with five other experts.
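The BED export mentioned in the abstract above can be illustrated with a minimal sketch. It assumes only the basic BED layout (tab-separated chromosome, 0-based start, exclusive end, optional name); the `Region` type and the `to_bed_lines` function are hypothetical names introduced for illustration and are not taken from the tool itself.

```python
from typing import Iterable, List, NamedTuple


class Region(NamedTuple):
    """A selected genomic interval (hypothetical type for this sketch)."""
    chrom: str       # chromosome name, e.g. "chr1"
    start: int       # 0-based start position, inclusive
    end: int         # end position, exclusive
    name: str = "."  # optional label; "." is used here when no label is given


def to_bed_lines(regions: Iterable[Region]) -> List[str]:
    """Serialize regions as tab-separated BED lines, sorted by chromosome and position."""
    lines = []
    for r in sorted(regions, key=lambda r: (r.chrom, r.start, r.end)):
        if r.start < 0 or r.end <= r.start:
            raise ValueError(f"invalid interval: {r}")
        lines.append(f"{r.chrom}\t{r.start}\t{r.end}\t{r.name}")
    return lines


if __name__ == "__main__":
    selection = [Region("chr2", 100, 200, "sel_b"), Region("chr1", 0, 50, "sel_a")]
    print("\n".join(to_bed_lines(selection)))
```

Because BED starts are 0-based and ends are exclusive, a region covering the first 50 bases of a chromosome is written as `0` and `50`.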

Digital 3D models for medieval heritage: diachronic analysis and documentation of its architecture and paintings

In this paper, we discuss the requirements and technical challenges within the EHEM project, Enhancement of Heritage Experiences: The Middle Ages, an ongoing research program for the acquisition, analysis, documentation, interpretation, digital restoration, and communication of medieval artistic heritage. The project involves multidisciplinary teams comprising art historians and visual computing experts. Despite the vast literature on digital 3D models in support of Cultural Heritage, the field is so rich and diverse that specific projects often imply distinct, unique requirements which often challenge the computational technologies and suggest new research opportunities. As good representatives of such diversity, we describe the three monuments that serve as test cases for the project, all of them with a rich history of architecture and paintings. We discuss the art historians’ view of how digital models can support their research, the expertise and technological solutions adopted so far, as well as the technical challenges in multiple areas spanning geometry and appearance acquisition, color analysis and digital restitution, as well as the representation of the profound transformations due to the alterations suffered over the centuries.

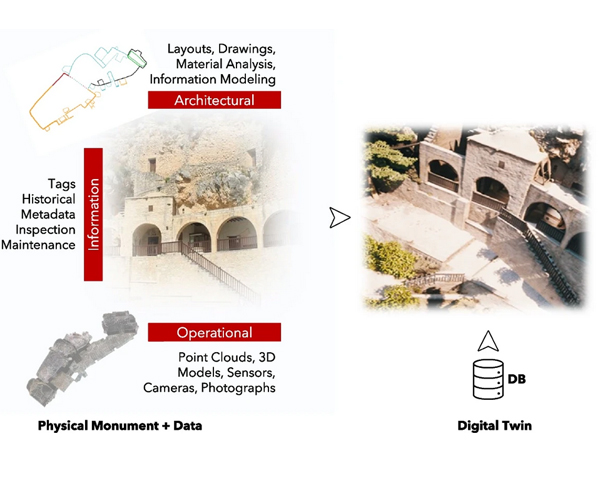

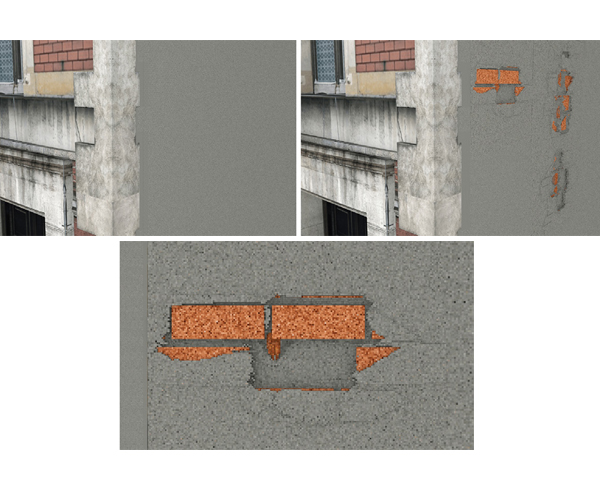

A 3D feature-based approach for mapping scaling effects on stone monuments

Weathering effects caused by physical, chemical, or biological processes result in visible damages that alter the appearance of stones’ surfaces. Consequently, weathered stone monuments can offer a distorted perception of the artworks to the point of making their interpretation misleading. Being able to detect and monitor decay is crucial for restorers and curators to perform important tasks such as identifying missing parts, assessing the preservation state, or evaluating curating strategies. Decay mapping, the process of identifying weathered zones of artworks, is essential for preservation and research projects. This is usually carried out by marking the affected parts of the monument on a 2D drawing or picture of it. One of the main problems of this methodology is that it is manual work based only on experts’ observations. This makes the process slow and often results in disparities between the mappings of the same monument made by different experts. In this paper, we focus on the weathering effect known as “scaling”, following the ICOMOS ISCS definition. We present a novel technique for detecting, segmenting, and classifying these effects on stone monuments. Our method is user-friendly, requiring minimal user input. By analyzing 3D reconstructed data considering geometry and appearance, the method identifies scaling features and segments weathered regions, classifying them by scaling subtype. It shows improvements over previous approaches and is well-received by experts, representing a significant step towards objective stone decay mapping.

An important challenge of Digital Cultural Heritage is to contribute to the recovery of artworks with their original shape and appearance. Many altarpieces, which are very relevant Christian art elements, have been damaged and/or, partly or fully, lost. Therefore, the only way to recover them is to carry out their digital reconstruction. Although the procedure that we present here is valid for any altarpiece with similar characteristics, and even for other akin elements, our test bench is the altarpieces damaged, destroyed, or disappeared during the Spanish Civil War (1936-1939) in Catalonia where most suffered these effects. The first step of our work has been the classification of these artworks into different categories on the basis of their degree of destruction and of the available visual information related to each one.

This paper proposes, for the first time to our knowledge, a workflow for the virtual reconstruction, through photogrammetry, digital modeling, and digital color restoration, of whole altarpieces that are partially preserved and for which very little visual information exists. Our case study is the Rosary’s altarpiece of Sant Pere Màrtir de Manresa church. Currently, this altarpiece is partially preserved in fragments in the Museu Comarcal de Manresa (Spain). However, it cannot be reassembled physically owing to the lack of space (the church no longer exists) and the cost of such an operation. Thus, there is no other solution than the digital one to contemplate and study the altarpiece as a whole. The reconstruction that we provide allows art historians and the general public to virtually see the altarpiece complete and assembled as it was until 1936. The results obtained also allow us to see in detail the reliefs and ornaments of the altarpiece with their digitally restored color.

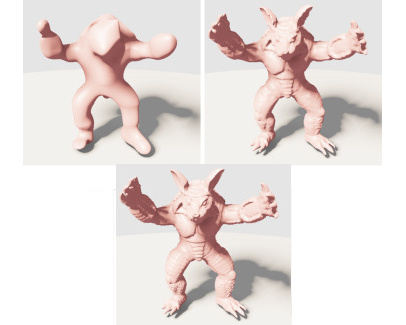

Deep weathering effects

Computers & Graphics, Vol. 112, pp 40--49, 2023.

DOI: http://dx.doi.org/10.1016/j.cag.2023.03.006

Computers & Graphics, Vol. 114, pp 306--315, 2023.

DOI: http://dx.doi.org/10.1016/j.cag.2023.06.019

We present a novel proposal for modeling complex dynamic terrains that offers real-time rendering, dynamic updates and physical interaction of entities simultaneously. We can capture any feature from landscapes including tunnels, overhangs and caves, and we can conduct a total destruction of the terrain. Our approach is based on a Constructive Solid Geometry tree, where a set of spheres are subtracted from a base Digital Elevation Model. Erosions on terrain are easily and efficiently carried out with a spherical sculpting tool with pixel-perfect accuracy. Real-time rendering performance is achieved by applying a one-direction CPU–GPU communication strategy and using the standard depth and stencil buffer functionalities provided by any graphics processor.
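As a rough illustration of the Constructive Solid Geometry idea in the abstract above (spheres subtracted from a base elevation model), the following sketch tests whether a point is still solid terrain. It is a CPU-side point query only, not the paper's rendering pipeline; `is_solid`, `height_at`, and the sphere tuple layout are assumptions made for this example.

```python
from typing import Callable, Sequence, Tuple

# (center_x, center_y, center_z, radius) of a sphere carved out of the terrain.
Sphere = Tuple[float, float, float, float]


def is_solid(
    point: Tuple[float, float, float],
    height_at: Callable[[float, float], float],
    carved: Sequence[Sphere],
) -> bool:
    """Return True if `point` lies in the base terrain minus all carved spheres.

    `height_at(x, y)` stands in for a Digital Elevation Model lookup;
    z is taken as the up axis in this sketch.
    """
    x, y, z = point
    # Above the elevation surface there is no material at all.
    if z > height_at(x, y):
        return False
    # CSG difference: any sphere containing the point removes it.
    for cx, cy, cz, r in carved:
        if (x - cx) ** 2 + (y - cy) ** 2 + (z - cz) ** 2 < r * r:
            return False
    return True


if __name__ == "__main__":
    flat = lambda x, y: 10.0               # flat terrain, 10 units high
    cave = [(0.0, 0.0, 5.0, 1.0)]          # one sphere carved below the surface
    print(is_solid((0.0, 0.0, 5.0), flat, cave))  # inside the cave
    print(is_solid((0.0, 0.0, 7.0), flat, cave))  # rock above the cave
```

A sphere placed entirely below the surface yields a cave with solid material above it, which a plain heightfield cannot represent.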

Transactions on Graphics, Vol. 42, Num. 4, pp 15, 2023.

DOI: http://dx.doi.org/10.1145/3592459

Simulating crowds with realistic behaviors is a difficult but very important task for a variety of applications. Quantifying how a person balances between different conflicting criteria such as goal seeking, collision avoidance and moving within a group is not intuitive, especially if we consider that behaviors differ largely between people. Inspired by recent advances in Deep Reinforcement Learning, we propose Guided REinforcement Learning (GREIL) Crowds, a method that learns a model for pedestrian behaviors which is guided by reference crowd data. The model successfully captures behaviors such as goal seeking, being part of consistent groups without the need to define explicit relationships and wandering around seemingly without a specific purpose. Two fundamental concepts are important in achieving these results: (a) the per agent state representation and (b) the reward function. The agent state is a temporal representation of the situation around each agent. The reward function is based on the idea that people try to move in situations/states in which they feel comfortable. Therefore, in order for agents to stay in a comfortable state space, we first obtain a distribution of states extracted from real crowd data; then we evaluate states based on how much of an outlier they are compared to such a distribution. We demonstrate that our system can capture and simulate many complex and subtle crowd interactions in varied scenarios. Additionally, the proposed method generalizes to unseen situations, generates consistent behaviors and does not suffer from the limitations of other data-driven and reinforcement learning approaches.

Public Bicycle Sharing Systems (BSS) have spread to many cities over the last decade. The need for analysis tools to predict the behavior or estimate balancing needs has fostered a wide set of approaches that consider many variables. Often, these approaches use a single scenario to evaluate their algorithms, and little is known about the applicability of such algorithms in BSS of different sizes. In this paper, we evaluate the performance of widely known prediction algorithms for three scenarios of different sizes: a small system, with around 20 docking stations; a medium-sized one, with 400+ docking stations; and a large one, with more than 1500 stations. The results show that Prophet and Random Forest are the prediction algorithms with the most consistent results, and that small systems often do not have enough data for the algorithms to perform reliably.

Computer Graphics Forum, Vol. 42, Num. 6, 2023.

DOI: http://dx.doi.org/10.1111/cgf.14738

Visualization plays a crucial role in molecular and structural biology. It has been successfully applied to a variety of tasks, including structural analysis and interactive drug design. While some of the challenges in this area can be overcome with more advanced visualization and interaction techniques, others are challenging primarily due to the limitations of the hardware devices used to interact with the visualized content. Consequently, visualization researchers are increasingly trying to take advantage of new technologies to facilitate the work of domain scientists. Some typical problems associated with classic 2D interfaces, such as regular desktop computers, are a lack of natural spatial understanding and interaction, and a limited field of view. These problems could be solved by immersive virtual environments and corresponding hardware, such as virtual reality head-mounted displays. Thus, researchers are investigating the potential of immersive virtual environments in the field of molecular visualization. There is already a body of work ranging from educational approaches to protein visualization to applications for collaborative drug design. This review focuses on molecular visualization in immersive virtual environments as a whole, aiming to cover this area comprehensively. We divide the existing papers into different groups based on their application areas, and types of tasks performed. Furthermore, we also include a list of available software tools. We conclude the report with a discussion of potential future research on molecular visualization in immersive environments.

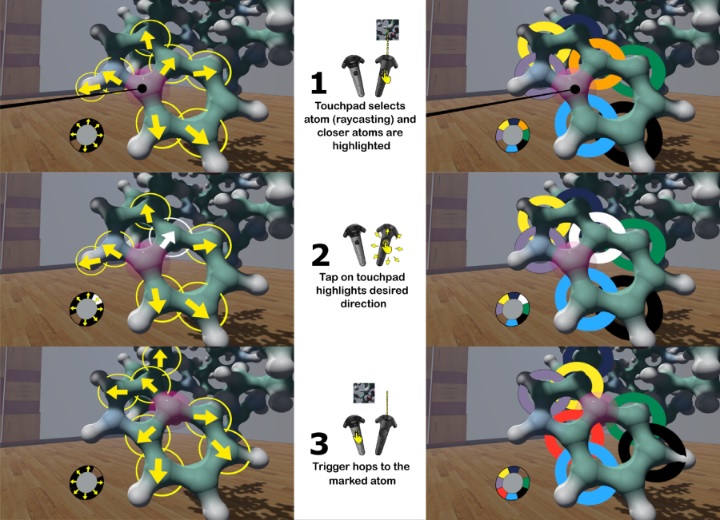

One of the key interactions in 3D environments is target acquisition, which can be challenging when targets are small or in cluttered scenes. Here, incorrect elements may be selected, leading to frustration and wasted time. The accuracy is further hindered by the physical act of selection itself, typically involving pressing a button. This action reduces stability, increasing the likelihood of erroneous target acquisition. We focused on molecular visualization and on the challenge of selecting atoms, rendered as small spheres. We present two techniques that improve upon previous progressive selection techniques. They facilitate the acquisition of neighbors after an initial selection, providing a more comfortable experience compared to using classical ray-based selection, particularly with occluded elements. We conducted a pilot study followed by two formal user studies. The results indicated that our approaches were highly appreciated by the participants. These techniques could be suitable for other crowded environments as well.

Computers & Graphics, Vol. 114, pp 316--325, 2023.

DOI: http://dx.doi.org/10.1016/j.cag.2023.06.021

Digital color restitution aims to digitally restore the original colors of a painting. Existing image editing applications can be used for this purpose, but they require a select-and-edit workflow and thus they do not scale well to large collections of paintings or different regions of the same painting. To address this issue, we propose an automated workflow that requires only a few representative source colors and associated target colors as input from art historians. The system then creates a control grid to model a deformation of the CIELAB color space. Such deformation can be applied to arbitrary images of the same painting. The proposed approach is suitable for restituting the color of images from a large photographic campaign, as well as for the textures of 3D reconstructions of a monument. We demonstrate the benefits of our method on a collection of mural paintings from a medieval monument.

Diagnostics, Vol. 13, Num. 5, pp 1--10, 2023.

DOI: http://dx.doi.org/10.3390/diagnostics13050910

The analysis of colonic contents is a valuable tool for the gastroenterologist and has multiple applications in clinical routine. When considering magnetic resonance imaging (MRI) modalities, T2 weighted images are capable of segmenting the colonic lumen, whereas fecal and gas contents can only be distinguished in T1 weighted images. In this paper, we present an end-to-end quasi-automatic framework that comprises all the steps needed to accurately segment the colon in T2 and T1 images and to extract and quantify colonic content and morphology data. As a consequence, physicians have gained new insights into the effects of diets and the mechanisms of abdominal distension.

Virtual Reality, Vol. 27, pp 2541--2560, 2023.

DOI: http://dx.doi.org/10.1007/s10055-023-00821-z

In the era of the metaverse, self-avatars are gaining popularity, as they can enhance presence and provide embodiment when a user is immersed in Virtual Reality. They are also very important in collaborative Virtual Reality to improve communication through gestures. Whether we are using a complex motion capture solution or a few trackers with inverse kinematics (IK), it is essential to have a good match in size between the avatar and the user, as otherwise mismatches in self-avatar posture could be noticeable for the user. To achieve such a correct match in dimensions, a manual process is often required, with the need for a second person to take measurements of body limbs and introduce them into the system. This process can be time-consuming, and prone to errors. In this paper, we propose an automatic measuring method that simply requires the user to do a small set of exercises while wearing a Head-Mounted Display (HMD), two hand controllers, and three trackers. Our work provides an affordable and quick method to automatically extract user measurements and adjust the virtual humanoid skeleton to the exact dimensions. Our results show that our method can reduce the misalignment produced by the IK system when compared to other solutions that simply apply a uniform scaling to an avatar based on the height of the HMD, and make assumptions about the locations of joints with respect to the trackers.
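For context, the uniform-scaling baseline that the abstract above compares against can be sketched in a few lines. This illustrates the baseline only, not the proposed measuring method; the function names and the bone-length dictionary are hypothetical.

```python
from typing import Dict


def uniform_scale_factor(user_hmd_height: float, avatar_eye_height: float) -> float:
    """Single scale factor mapping the avatar's eye height to the user's HMD height."""
    if user_hmd_height <= 0 or avatar_eye_height <= 0:
        raise ValueError("heights must be positive")
    return user_hmd_height / avatar_eye_height


def scale_bone_lengths(bone_lengths: Dict[str, float], factor: float) -> Dict[str, float]:
    """Apply the same factor to every bone, as a uniform-scaling approach would."""
    return {bone: length * factor for bone, length in bone_lengths.items()}


if __name__ == "__main__":
    factor = uniform_scale_factor(user_hmd_height=1.5, avatar_eye_height=2.0)
    print(scale_bone_lengths({"upper_arm": 0.4, "forearm": 0.32}, factor))
```

Since every bone receives the same factor, a user whose arms are long or short relative to their height still ends up with mismatched limb lengths, which is the kind of misalignment per-limb measurements aim to avoid.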

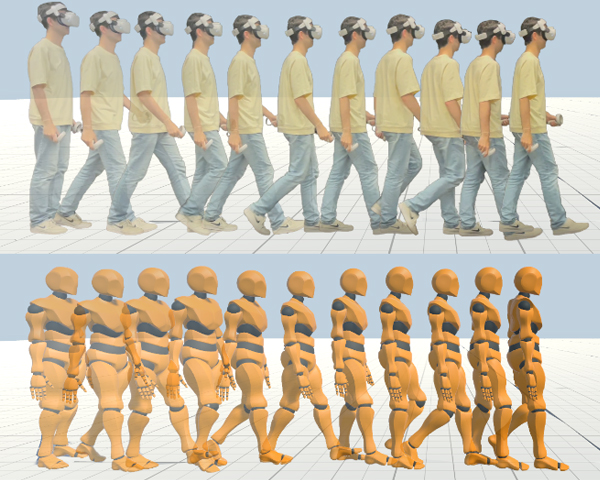

SparsePoser: Real-Time Full-Body Motion Reconstruction from Sparse Data

ACM Transactions on Graphics, Vol. 43, Num. 1, pp 1--14, 2023.

DOI: http://dx.doi.org/10.1145/3625264

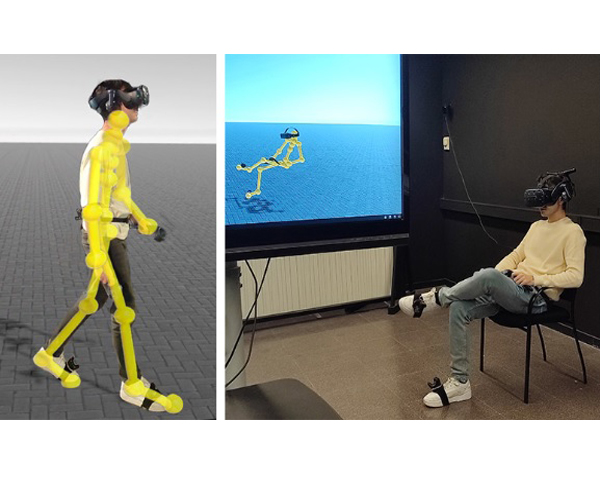

Accurate and reliable human motion reconstruction is crucial for creating natural interactions of full-body avatars in Virtual Reality (VR) and entertainment applications. As the Metaverse and social applications gain popularity, users are seeking cost-effective solutions to create full-body animations that are comparable in quality to those produced by commercial motion capture systems. In order to provide affordable solutions though, it is important to minimize the number of sensors attached to the subject’s body. Unfortunately, reconstructing the full-body pose from sparse data is a heavily under-determined problem. Some studies that use IMU sensors face challenges in reconstructing the pose due to positional drift and ambiguity of the poses. In recent years, some mainstream VR systems have released 6-degree-of-freedom (6-DoF) tracking devices providing positional and rotational information. Nevertheless, most solutions for reconstructing full-body poses rely on traditional inverse kinematics (IK) solutions, which often produce non-continuous and unnatural poses. In this paper, we introduce SparsePoser, a novel deep learning-based solution for reconstructing a full-body pose from a reduced set of six tracking devices. Our system incorporates a convolutional-based autoencoder that synthesizes high-quality continuous human poses by learning the human motion manifold from motion capture data. Then, we employ a learned IK component, made of multiple lightweight feed-forward neural networks, to adjust the hands and feet towards the corresponding trackers. We extensively evaluate our method on publicly available motion capture datasets and with real-time live demos. We show that our method outperforms state-of-the-art techniques using IMU sensors or 6-DoF tracking devices, and can be used for users with different body dimensions and proportions.

Computer Graphics Forum, Vol. 42, Num. 6, pp e14861, 2023.

DOI: http://dx.doi.org/10.1111/cgf.14861

We present an acceleration structure to efficiently query the Signed Distance Field (SDF) of volumes represented by triangle meshes. The method is based on a discretization of space. In each node, we store the triangles defining the SDF behaviour in that region. Consequently, we reduce the cost of the nearest triangle search, prioritizing query performance, while avoiding approximations of the field. We propose a method to conservatively compute the set of triangles influencing each node. Given a node, each triangle defines a region of space such that all points inside it are closer to a point in the node than the triangle is. This property is used to build the SDF acceleration structure. We do not need to explicitly compute these regions, which is crucial to the performance of our approach. We prove the correctness of the proposed method and compare it to similar approaches, confirming that our method produces faster query times than other exact methods.

Computers & Graphics, Vol. 114, pp 337--346, 2023.

DOI: http://dx.doi.org/10.1016/j.cag.2023.06.020

In this paper, we present an adaptive structure that represents a signed distance field through trilinear or tricubic interpolation of values and derivatives, allowing fast queries of the field. We also provide a method to decide when to subdivide a node to meet a given error threshold. Both the numerical error control and the values needed to build the interpolants require evaluating the input field; both are designed to minimize the total number of evaluations.

C0 continuity is guaranteed for both the trilinear and tricubic versions of the algorithm. Furthermore, we describe how to preserve C0 continuity between nodes of different levels when using a tricubic interpolant, and provide a proof that this property is maintained. Finally, we illustrate the usage of our approach in several applications, including direct rendering using sphere marching.
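The two building blocks named in this abstract, trilinear interpolation inside a node and sphere marching over a distance field, can be sketched as follows. This is a minimal sketch under stated assumptions rather than the paper's code: the function names (`trilinear`, `sphere_march`), the corner layout `corners[i][j][k]`, and the default step limits are all hypothetical, and the adaptive subdivision and tricubic variant are not shown.

```python
import math

def trilinear(corners, u, v, w):
    """Interpolate 8 corner samples of a node.

    corners[i][j][k] is the field value at the corner (x=i, y=j, z=k) of a
    unit cube; (u, v, w) are local coordinates in [0, 1].
    """
    # Interpolate along x on each of the four x-aligned edges.
    c00 = corners[0][0][0] * (1 - u) + corners[1][0][0] * u
    c10 = corners[0][1][0] * (1 - u) + corners[1][1][0] * u
    c01 = corners[0][0][1] * (1 - u) + corners[1][0][1] * u
    c11 = corners[0][1][1] * (1 - u) + corners[1][1][1] * u
    # Then along y, then along z.
    c0 = c00 * (1 - v) + c10 * v
    c1 = c01 * (1 - v) + c11 * v
    return c0 * (1 - w) + c1 * w

def sphere_march(sdf, origin, direction, max_steps=64, eps=1e-4, t_max=100.0):
    """Advance along a ray by the distance the field reports at each step.

    `direction` is assumed to be unit length. Returns the ray parameter of the
    first hit, or None if nothing is hit within the limits.
    """
    t = 0.0
    for _ in range(max_steps):
        p = tuple(o + t * d for o, d in zip(origin, direction))
        dist = sdf(p)
        if dist < eps:
            return t
        t += dist
        if t > t_max:
            break
    return None

def unit_sphere_sdf(p):
    # Analytic stand-in for an interpolated field: sphere of radius 1 at the origin.
    return math.sqrt(p[0] ** 2 + p[1] ** 2 + p[2] ** 2) - 1.0
```

For example, `sphere_march(unit_sphere_sdf, (0.0, 0.0, -3.0), (0.0, 0.0, 1.0))` steps straight to the sphere surface. In a renderer built on the adaptive structure, `sdf` would instead locate the node containing `p` and evaluate its interpolant.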

Journal of Applied Sciences, special issue AI Applied to Data Visualization, Vol. 13, Num. 17, 2023.

DOI: http://dx.doi.org/10.3390/app13179967

In data science and visualization, dimensionality reduction techniques have been extensively employed for exploring large datasets. These techniques transform high-dimensional data into reduced versions, typically in 2D, with the aim of preserving significant properties of the original data. Many dimensionality reduction algorithms exist, and nonlinear approaches such as t-SNE (t-Distributed Stochastic Neighbor Embedding) and UMAP (Uniform Manifold Approximation and Projection) have gained popularity in the field of information visualization. In this paper, we introduce a simple yet powerful manipulation for vector datasets that modifies their values based on weight frequencies. This technique significantly improves the results of the dimensionality reduction algorithms across various scenarios. To demonstrate the efficacy of our methodology, we conduct an analysis on a collection of well-known labeled datasets. The results demonstrate improved clustering performance when attempting to classify the data in the reduced space. Our proposal presents a comprehensive and adaptable approach to enhance the outcomes of dimensionality reduction for visual data exploration.

ACM SIGGRAPH / Eurographics Symposium on Computer Animation (SCA'2022), 2022.

DOI: http://dx.doi.org/10.1111/cgf.14628

The animation of user avatars plays a crucial role in conveying their pose, gestures, and relative distances to virtual objects or other users. Consumer-grade VR devices typically include three trackers: the Head Mounted Display (HMD) and two handheld VR controllers. Since the problem of reconstructing the user pose from such sparse data is ill-defined, especially for the lower body, the approach adopted by most VR games consists of assuming the body orientation matches that of the HMD, and applying animation blending and time-warping from a reduced set of animations. Unfortunately, this approach produces noticeable mismatches between user and avatar movements. In this work, we present a new approach to animate user avatars for current mainstream VR devices. First, we use a neural network to estimate the user’s body orientation based on the tracking information from the HMD and the hand controllers. Then we use this orientation together with the velocity and rotation of the HMD to build a feature vector that feeds a Motion Matching algorithm. We built a MoCap database with animations of VR users wearing an HMD and used it to test our approach on both self-avatars and other users’ avatars. Our results show that our system can provide a large variety of lower body animations while correctly matching the user orientation, which in turn allows us to represent not only forward movements but also stepping in any direction.
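The core lookup in a Motion Matching step, finding the database pose whose feature vector is closest to the current query, can be sketched as below. This is a minimal sketch under stated assumptions and not the authors' system: the feature layout in `build_feature` (a 2D body direction, a 2D HMD velocity, and a single HMD yaw rate) and both function names are hypothetical, the neural network that estimates body orientation is not shown, and a plain squared Euclidean cost stands in for whatever weighting the paper uses.

```python
import math

def build_feature(body_yaw, hmd_velocity, hmd_yaw_rate):
    """Pack the query into a flat feature vector (hypothetical layout).

    body_yaw:      estimated body orientation in radians
    hmd_velocity:  (vx, vz) horizontal HMD velocity
    hmd_yaw_rate:  HMD rotation speed around the vertical axis
    """
    return (math.cos(body_yaw), math.sin(body_yaw),
            hmd_velocity[0], hmd_velocity[1], hmd_yaw_rate)

def motion_match(query, database):
    """Return the index of the database feature vector closest to `query`."""
    best_index, best_cost = -1, float("inf")
    for index, features in enumerate(database):
        # Squared Euclidean distance between query and candidate features.
        cost = sum((q - f) ** 2 for q, f in zip(query, features))
        if cost < best_cost:
            best_index, best_cost = index, cost
    return best_index
```

The returned index would select the animation frame to play next. A production system would typically normalize each feature and use a spatial index rather than a linear scan.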

H3DViRViC Conferences Results

Research-oriented universities often comprise numerous researchers of various types and possess complex research structures that encompass research groups, departments, laboratories, and research institutes. In this situation, understanding the university’s strengths and areas of excellence requires careful examination. Additionally, individuals at different levels of governance (e.g., department heads, directors of research institutes, rectors) may seek to establish synergies among researchers to tackle issues such as international project applications or industry technology transfer. University officials and faculty members frequently require the expertise of specific research groups or individuals, but struggle to obtain this information beyond their personal networks. This limits their ability to locate necessary resources effectively. Fortunately, most institutions have databases containing publications that could provide valuable insights into areas of strength within the university. In this article, we present a visual analysis application capable of addressing these questions and assisting management in making informed decisions regarding governance measures such as creating new research institutes. Our system has been evaluated by domain experts, who found it highly beneficial and expressed interest in utilising it regularly.

VCBM 2023: Eurographics Workshop on Visual Computing for Biology and Medicine, pp 57--61, 2023.

DOI: http://dx.doi.org/10.2312/vcbm.20232019

The segmentation of medical models is a complex and time-intensive process required for both diagnosis and surgical preparation. Despite the advancements in deep learning, neural networks can only automatically segment a limited number of structures, often requiring further validation by a domain expert. In numerous instances, manual segmentation is still necessary. Virtual Reality (VR) technology can enhance the segmentation process by providing improved perception of segmentation outcomes and enabling interactive supervision by experts. However, inspecting how the segmentation algorithm is progressing and defining new seeds both require seeing the inner layers of the volume, which can be costly and difficult to achieve with typical metaphors such as clipping planes. In this paper, we introduce a wedge-shaped 3D interaction metaphor designed to facilitate VR-based segmentation through detailed inspection and guidance. User evaluations demonstrated increased satisfaction with usability and faster task completion times using the tool.

H3DViRViC PhD Thesis Results